Dense 3D Mapping Based on ElasticFusion and Mask-RCNN

December 14, 2018


Project Goal

The goal of this project is to build a simultaneous localization and semantic mapping (SLAM) system.

Source Code:

All C++ source code is available on my GitHub Page.

Pipeline:

- A real-time dense visual SLAM system (ElasticFusion) that generates a surfel map.
- A segmenter based on Mask R-CNN that performs semantic segmentation on the input 2D RGB stream and then projects the semantic labels from 2D pixels onto surfels in the 3D dense map.
- A Bayesian update scheme that refines the semantic labels stored on existing surfels.

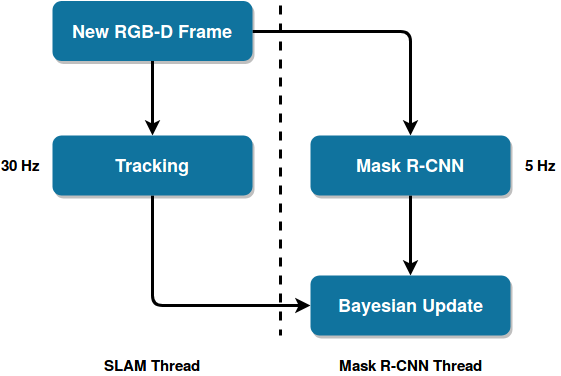

The system flow is as follows:

1. Tracking

I use ElasticFusion [1] as the SLAM part of the pipeline. It estimates the camera pose by minimising a joint geometric and photometric cost function:

\begin{equation} E_{track}=E_{icp}+w_{rgb}E_{rgb} \end{equation}

Here $w_{rgb}$ weights the photometric term relative to the geometric one. The geometric cost is the point-to-plane error used by the iterative closest point (ICP) algorithm:

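\begin{equation} E_{icp}=\sum_{k}\left(\left(\textbf{v}^{k}-\exp(\hat{\xi})\textbf{T}\textbf{v}_{t}^{k}\right)\cdot \textbf{n}^{k}\right)^2 \end{equation}

where $\textbf{v}_{t}^{k}$ is the back-projection of the $k$-th vertex of the current depth map, and $\textbf{v}^{k}$ and $\textbf{n}^{k}$ are the corresponding vertex and normal of the model, expressed in the previous camera frame.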
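As a minimal C++ sketch of the geometric term, the function below evaluates $E_{icp}$ over a list of correspondences. It assumes that data association has already been done and that each live vertex has already been transformed by the current estimate $\exp(\hat{\xi})\textbf{T}$. `Correspondence` and `icpCost` are hypothetical names chosen for illustration and are not part of ElasticFusion's code.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Vec3 = std::array<double, 3>;

// One data association (assumed to be computed elsewhere): the model vertex
// v^k and normal n^k, plus the live vertex v_t^k already transformed into
// the model frame by the current estimate exp(xi^) T.
struct Correspondence {
    Vec3 model_vertex;  // v^k
    Vec3 model_normal;  // n^k, expected to be unit length
    Vec3 live_vertex;   // exp(xi^) T v_t^k
};

double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Point-to-plane ICP cost: sum_k ((v^k - exp(xi^) T v_t^k) . n^k)^2.
double icpCost(const std::vector<Correspondence>& corrs) {
    double cost = 0.0;
    for (const auto& c : corrs) {
        assert(std::abs(dot(c.model_normal, c.model_normal) - 1.0) < 1e-6);
        const Vec3 diff{c.model_vertex[0] - c.live_vertex[0],
                        c.model_vertex[1] - c.live_vertex[1],
                        c.model_vertex[2] - c.live_vertex[2]};
        // Signed distance of the live point from the model's tangent plane.
        const double r = dot(diff, c.model_normal);
        cost += r * r;
    }
    return cost;
}
```

Each residual is projected onto the model normal, so a live point that slides along the model surface adds nothing. Only displacement along the normal is penalised.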

The photometric cost sums the squared photometric error (intensity difference) between each pixel of the current frame and its reprojection into the color image predicted from the model:

\begin{equation} E_{rgb}=\sum_{\textbf{u}\in\Omega}\left(I(\textbf{u},C_{t}^{l})-I\left(\pi(\textbf{K}\exp(\hat{\xi})\textbf{T}\textbf{p}(\textbf{u},D_{t}^{l})),\hat{C}_{t-1}^{a}\right)\right)^2 \end{equation}

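where $\textbf{T}$ is the current estimate of the transformation from the previous camera pose to the current one, and $\exp(\hat{\xi})$ maps the incremental motion $\xi \in \mathfrak{se}(3)$ to a rigid-body transformation in $SE(3)$. $\textbf{K}$ is the camera intrinsic matrix, $\textbf{p}(\textbf{u}, D_{t}^{l})$ back-projects pixel $\textbf{u}$ using the current depth map, $\pi$ is the perspective projection, and $\hat{C}_{t-1}^{a}$ is the color image predicted from the active model at the previous pose.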
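The photometric term can be sketched in the same way. The hypothetical function below works on a single pyramid level. It back-projects each pixel that has valid depth, applies a rigid transform that stands in for $\exp(\hat{\xi})\textbf{T}$, projects the result with the pinhole intrinsics, and compares intensities. The types `Intrinsics`, `Pose` and `Image` are assumptions made for illustration. For brevity the sketch uses nearest-pixel lookup, whereas a real implementation would typically interpolate.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Pinhole intrinsics K (hypothetical struct).
struct Intrinsics { double fx, fy, cx, cy; };

// Rigid transform standing in for exp(xi^) T: q = R p + t.
struct Pose { double R[3][3]; double t[3]; };

// Row-major single-channel image.
struct Image {
    int width, height;
    std::vector<float> data;
    float at(int x, int y) const { return data[y * width + x]; }
};

// Sum over pixels u with valid depth of
// (I(u, C_t) - I(pi(K * pose * p(u, D_t)), C_hat_{t-1}))^2.
double photometricCost(const Image& intensity, const Image& depth,
                       const Image& predicted, const Intrinsics& K,
                       const Pose& pose) {
    assert(intensity.width == depth.width && intensity.height == depth.height);
    double cost = 0.0;
    for (int v = 0; v < depth.height; ++v) {
        for (int u = 0; u < depth.width; ++u) {
            const double z = depth.at(u, v);
            if (z <= 0.0) continue;  // no depth measurement at this pixel
            // p(u, D): back-project the pixel into the current camera frame.
            const double p[3] = {(u - K.cx) * z / K.fx, (v - K.cy) * z / K.fy, z};
            // Apply the current pose estimate.
            double q[3];
            for (int i = 0; i < 3; ++i)
                q[i] = pose.R[i][0] * p[0] + pose.R[i][1] * p[1] + pose.R[i][2] * p[2] + pose.t[i];
            if (q[2] <= 0.0) continue;  // point ends up behind the camera
            // pi(K q): perspective projection, rounded to the nearest pixel.
            const int x = static_cast<int>(std::lround(K.fx * q[0] / q[2] + K.cx));
            const int y = static_cast<int>(std::lround(K.fy * q[1] / q[2] + K.cy));
            if (x < 0 || y < 0 || x >= predicted.width || y >= predicted.height) continue;
            const double r = intensity.at(u, v) - predicted.at(x, y);
            cost += r * r;
        }
    }
    return cost;
}
```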

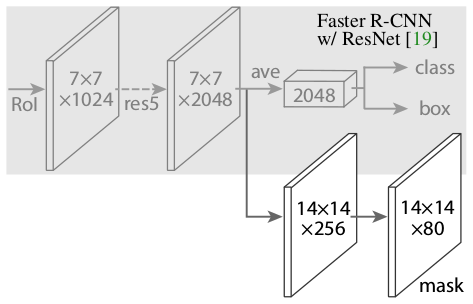

2. Mask R-CNN

Mask R-CNN [2] serves as the segmentation part. It provides high-quality instance segmentation for up to 80 object classes at 5 Hz. Its output is a set of binary masks, each with the corresponding instance's class ID and confidence score. Mask R-CNN's architecture is shown below:

Here are the 80 classes on which Mask R-CNN can perform instance segmentation:

Mask R-CNN does not output a per-class confidence for each pixel, and the Bayesian update needs one. I therefore post-process the Mask R-CNN outputs (the binary masks together with their class IDs and confidence scores) to obtain the confidence of each class for every pixel.
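The sketch below shows one plausible conversion. The scheme itself and the names `Detection` and `perPixelClassProbabilities` are assumptions for illustration. For each pixel, the most confident detection whose mask covers it assigns its score to its own class, and the remaining probability mass is spread evenly over the other classes. A pixel covered by no mask gets a uniform, uninformative distribution.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One Mask R-CNN instance (hypothetical container): a row-major binary mask,
// its class ID in [0, numClasses) and its detection confidence in [0, 1].
struct Detection {
    std::vector<bool> mask;
    int classId;
    float score;
};

// Returns numClasses floats per pixel (row-major), each block summing to 1.
std::vector<float> perPixelClassProbabilities(const std::vector<Detection>& dets,
                                              int width, int height, int numClasses) {
    assert(numClasses > 1);
    const std::size_t numPixels = static_cast<std::size_t>(width) * height;
    const float uniform = 1.0f / static_cast<float>(numClasses);
    std::vector<float> probs(numPixels * numClasses, uniform);
    for (std::size_t p = 0; p < numPixels; ++p) {
        // Pick the most confident detection whose mask covers this pixel.
        const Detection* best = nullptr;
        for (const auto& d : dets) {
            assert(d.mask.size() == numPixels);
            if (d.mask[p] && (best == nullptr || d.score > best->score)) best = &d;
        }
        if (best == nullptr) continue;  // uncovered: keep the uniform distribution
        assert(best->classId >= 0 && best->classId < numClasses);
        float* dist = &probs[p * numClasses];
        const float rest = (1.0f - best->score) / static_cast<float>(numClasses - 1);
        for (int c = 0; c < numClasses; ++c) dist[c] = rest;
        dist[best->classId] = best->score;
    }
    return probs;
}
```

Under a normalised Bayesian update, a uniform distribution leaves a surfel's belief unchanged, so uncovered pixels contribute no evidence. Scores should stay strictly below 1. Otherwise the other classes are driven to zero and can never recover.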

3. Bayesian Update

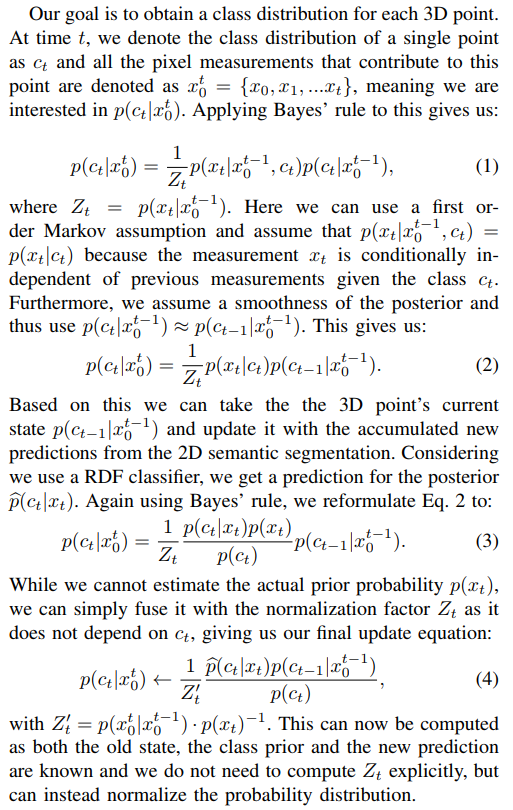

In the 3D surfel map generated by ElasticFusion, each surfel stores a discrete probability distribution over the set of class labels. Each newly generated surfel is initialised with a uniform distribution over the semantic classes, since it begins with no prior knowledge.

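Once the Mask R-CNN thread finishes inference on frame $I_k$, we obtain an independent per-pixel probability distribution over the class labels $l_i$, written $P(O_{\textbf{u}} = l_i \mid I_k)$. The following recursive Bayesian update [4] then updates all class-label probabilities of each surfel $s$:

\begin{equation} P(l_i \mid I_{1,\dots,k}) = \frac{1}{Z}\, P(l_i \mid I_{1,\dots,k-1})\, P(O_{\textbf{u}(s,k)} = l_i \mid I_k) \end{equation}

where $\textbf{u}(s,k)$ is the pixel onto which surfel $s$ projects in frame $k$, and the normaliser $Z$ makes the result a proper distribution.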
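A minimal C++ sketch of the per-surfel bookkeeping might look like this. It covers both the uniform initialisation and the normalised recursive update. `SemanticSurfel` is a hypothetical type, and it keeps the distribution in a `std::vector` purely for clarity.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-surfel semantic state: P(l_i | I_1..k) for every class l_i.
struct SemanticSurfel {
    std::vector<float> probs;

    // A new surfel has no prior knowledge, so it starts uniform.
    explicit SemanticSurfel(int numClasses)
        : probs(static_cast<std::size_t>(numClasses), 1.0f / static_cast<float>(numClasses)) {}

    // One recursive Bayesian step: multiply by the distribution
    // P(O_u = l_i | I_k) of the pixel u(s, k) this surfel projects to,
    // then divide by the normaliser Z.
    void update(const std::vector<float>& observation) {
        assert(observation.size() == probs.size());
        float z = 0.0f;
        for (std::size_t i = 0; i < probs.size(); ++i) {
            probs[i] *= observation[i];
            z += probs[i];
        }
        assert(z > 0.0f);  // fails only if the observation zeroes every class
        for (float& p : probs) p /= z;
    }
};
```

Take a four-class surfel and call `update` twice with the observation {0.1, 0.1, 0.7, 0.1}. The probability of the third class rises from 0.7 after the first call to about 0.94 after the second, which shows how repeated consistent observations sharpen the distribution.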

4. Future Work

Mask R-CNN does an excellent job of separating the main body of an object from the background, but it does not produce accurate instance boundaries. These boundaries, however, usually coincide with discontinuities in depth. The segmentation could therefore be refined by running a geometric segmentation on the depth frame, guided by the Mask R-CNN inference.
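The hypothetical function below is a very crude illustration of this idea, not a full geometric segmentation. It keeps only those mask pixels whose depth lies within a tolerance of the mask's median depth, which drops pixels that bleed across a depth discontinuity onto the background. It assumes the mask and depth frame are registered, stored row-major at the same resolution, and that zero marks missing depth.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Keep only mask pixels whose depth is within `tolerance` (same units as the
// depth map) of the median depth of the masked region.
std::vector<bool> refineMaskWithDepth(const std::vector<bool>& mask,
                                      const std::vector<float>& depth,
                                      float tolerance) {
    assert(mask.size() == depth.size());
    std::vector<float> inside;
    for (std::size_t i = 0; i < mask.size(); ++i)
        if (mask[i] && depth[i] > 0.0f) inside.push_back(depth[i]);
    if (inside.empty()) return mask;  // no valid depth to refine against
    // Median depth of the masked region (upper median for even counts).
    auto mid = inside.begin() + static_cast<std::ptrdiff_t>(inside.size() / 2);
    std::nth_element(inside.begin(), mid, inside.end());
    const float median = *mid;
    std::vector<bool> refined(mask.size(), false);
    for (std::size_t i = 0; i < mask.size(); ++i)
        refined[i] = mask[i] && depth[i] > 0.0f && std::abs(depth[i] - median) <= tolerance;
    return refined;
}
```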

References

[1] ElasticFusion: Real-Time Dense SLAM and Light Source Estimation, T. Whelan, R. F. Salas-Moreno, B. Glocker, A. J. Davison, and S. Leutenegger, IJRR 2016

[2] Mask R-CNN, K. He, G. Gkioxari, P. Dollár, and R. Girshick, ICCV 2017

[3] C++ implementation of Mask R-CNN, Kolkir

[4] SemanticFusion: Dense 3D Semantic Mapping with Convolutional Neural Networks, J. McCormac, A. Handa, A. J. Davison, and S. Leutenegger, ICRA 2017